Human Web—Collecting data in a socially responsible manner

Measures to prevent record linking & privacy leaks.

Evilness does not necessarily come out of the data itself but rather from how and why it is collected. There is great resistance for fine-grained discussions about this topic because we are trapped on a false dichotomy: data or privacy.

The previous post “Is Data Collection Evil?” is an introduction to the topic of data collection. If you have not read it yet, we highly recommend you do so first.

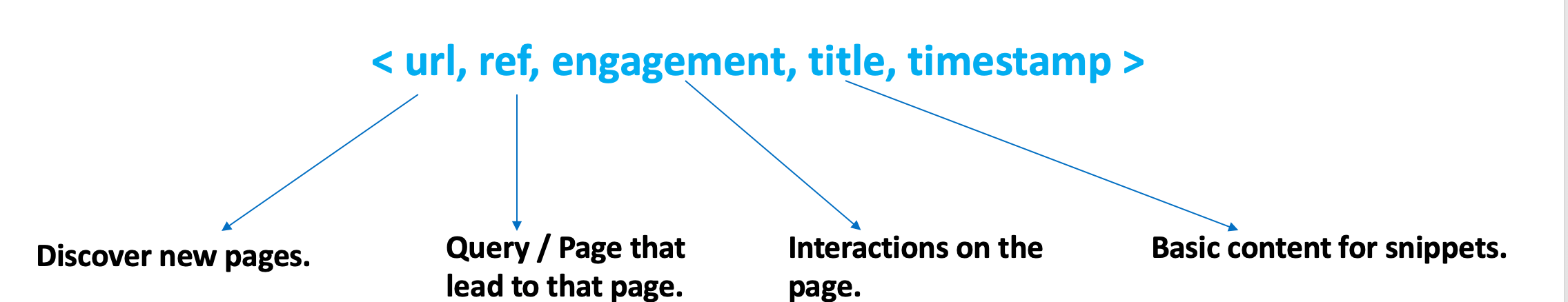

Cliqz needs data to power the services it offers: search, tracking protection, anti-phishing, etc. Talking about search specifically, instead of blindly crawling the web to build a search engine, users of the Cliqz browser contribute data to the search index by sending statistics about pages they visit, queries that lead them to pages– regardless of the search engine used–title and metadata for snippets, etc.

We understand that the above paragraph raises several questions. We, too, used to assume that sending data is bad with regards to privacy. But before claiming mistakenly that “the Cliqz browser slurps users’ history”, please hear us out.

In this post we would like to elaborate more on:

- The risks associated with collecting data, especially when the subject of data collection involves human actions on the Web.

- Human Web, a system running in the browser and built with privacy in mind from the ground up, to mitigate the risks that come alongside data collection, even before any data is sent to Cliqz servers.

- Transparency and tools for anyone to verify the claims we make or opt-out if desired.

Human Web was started early 2015 as a responsible way to collect data from users and has continuously evolved ever since. It was designed with the premise that it should be technically infeasible for Cliqz to collect data which can be used for creating user profiles. We will also see in the following sections that when the data involves URLs, Human Web is able to strictly detect and only send back the ones meant for public consumption[1]. Any data collection done at Cliqz is and always has been in line with this methodology.

Hopefully, in the process of sharing our approach via this post, we are able to motivate other data collection practitioners to adopt the Human Web approach and collect data in a safer and more socially responsible manner.

Preventing Record Linkage

The fundamental idea of the Human Web is simple: to actively prevent Record Linkage. Record linkage is the ability to know that multiple data points are coming from the same user.

In order to be able to link the messages back together on the backend, the usual approach is for a client to attach a User Identifier (UID) to each message.

A User Identifier (UID) is a piece of information that gives the server the ability to tag messages coming from the same user. UIDs can be of multiple types like:

- Explicit UIDs : Identifiers that the application adds. They are meant to be unique for each user and are persisted in the user’s browser. The lifespan of such identifiers is application dependent and is then automatically sent back with every request to the backend. (e.g.: using cookies).

- Implicit UIDs : Even if the application does not add a UID explicitly, based on what data is sent and how it’s sent, there could be enough information to form UIDs on the server-side. They can be further divided into two parts:

- Content independent UIDs

- Content dependent UIDs

- Network UIDs: UIDs that a server can create based on network-level information such as IPs and other network-level data.

One could argue that the UID is needed to perform some data analysis, which is the underlying motivation for collecting data in the first place, but we cannot ignore the privacy side-effects that come along with sending UIDs to the backend.

A privacy side-effect is the knowledge that can be gained from data analysis which was neither intended nor expected, and that poses a risk to the privacy of the subjects of the data collection.

Example: Privacy Side Effects

Let us say you are a service provider named Acme Analytics that provides analytics to website owners.

The typical way to provide this service would be via a JavaScript snippet that website owners need to embed on their websites. This snippet would then send back tuples of the following form to Acme’s servers:

<timestamp, uid, url>

Given that Acme Analytics is present on pages from other websites as well, the data stream to their servers would look somewhat like:

| TimeStamp | UID | URL |

|---|---|---|

| 2018-07-09 14:06 | A1234 | sportsnews.com/sports/ |

| 2018-07-09 14:07 | A1234 | news.com/myaccount/solso/ |

| 2018-07-09 14:07 | A1234 | ecommerce.com/receipt_id=1230981 |

| 2018-07-09 14:08 | A1234 | sportsnews.com/sports/ |

| 2018-07-09 14:06 | B5678 | sportsnews.com/sports/ |

| 2018-07-09 14:07 | B5678 | sportsnews.com/sports/ |

When Acme needs to provide insights like unique visitors on a website, they can simply perform an aggregation based on:

<UID, get_domain(url), timestamp>.

With the above data this would become:

| TimeStamp | Unique Visitors |

|---|---|

| sportsnews.com | 2 |

| news.com | 1 |

| ecommerce.com | 1 |

The legitimate use case was to count the unique visitors; which can be easily solved. But because of the way the data is collected, there are some privacy side-effects. Now, not only can Acme analytics use the data for analyses like counting uniques, but the same data can now be misused to track all websites and URLs that user A1234 has visited:

| Websites | UID |

|---|---|

| sportsnews.com/sports/ | A1234 |

| news.com/myaccount/alice/ | A1234 |

| ecommerce.com/receipt_id=1230981 | A1234 |

| sportsnews.com/sports/ | A1234 |

Moreover, if any URL contains private information, it will allow de-anonymizing the user. For example:

news.com/myaccount/alice/ -> Belongs to user Alice

ecommerce.com/receipt_id=1230981 -> Extract purchase data from this page

Not all private pages are behind a login, having receipt ID can be enough to access purchase details.

It does not matter whether the UID is short lived or long lived; what is important is that this UID leads to linkage, which leads to sessions– and these sessions are very dangerous with regards to privacy.

Even if you are not sending a UID explicitly your messages can contain enough information to implicitly identify users.[2].

One way to deal with such side-effects would be to have privacy and data retention policies and have pseudo-guarantees that this data will not be leaked or misused.

This is not something we want to do at Cliqz, because we are not comfortable with shallow guarantees based on a Terms of Service and Privacy Policy agreements. Although these are legally binding, they are hard for the users to verify, it is not enough for us and should not be enough for our users either.

Human Web is a methodology and system designed to collect data, while guaranteeing that signals cannot be turned into sessions once they reach Cliqz. How? By strictly forbidding any UID–explicit or implicit–which could be used to link records belonging to the same user.

By preventing record linkage, aggregation of user’s data in the server-side (on Cliqz premises) is technically impossible, as we have no means of knowing who the original owner of the data is. This is a strong departure from the industry standard of collecting data.

Let us illustrate with an example how we can collect some data points for our search from within the Cliqz browser–adhering to the above principles, not hiding behind a privacy policy and not losing sight of users’ privacy.

Bad Queries

Since Cliqz is a search engine, we need to know for which queries our results are not good enough. A legitimate use case which we will call: bad-queries. How do we achieve this?

We want to observe events where a user queries in Cliqz and then, within one hour, performs the same query again, but on a different search engine. This would be an indicator that Cliqz’s results for query need to be improved. There are several approaches to collect the data needed for this quality assessment.

It is important to understand the consequences of record linkability. Therefore, at the risk of sounding redundant, let us first look at how this problem statement can be solved using the traditional approach of server-side aggregation.

Server-Side Aggregation

With this approach, we would collect URLs of search engine result pages; the query and search engine name can then be extracted from the URL. We would also need to keep a timestamp and a UID to know which queries were performed by the same person. With this data, it is then straightforward to implement a script that finds the bad queries we are looking for.

The data that we would collect with the server-side aggregation approach would look like this:

...

SERP=cliqz.com/q=pizza near arabellapark, UID=X, TIMESTAMP=2019...

SERP=google.com/q=pizza near arabellapark, UID=X, TIMESTAMP=2019...

SERP=google.com/q=opthamologist munich, UID=X, TIMESTAMP=2019...

SERP=google.com/q=trump for president, UID=Y, TIMESTAMP=2019...

...

A script would iterate over these URLs, checking for repetitions of the tuple <UID, query> within a certain time frame. By doing so, in the example, we would find that the query pizza near arabellapark seems to be problematic. This is the information we were looking for.

While this data can be used to solve our use case of bad-queries, we could also use the same data to build a session for a given user. Let us consider an “anonymous” user :

user=X, queries={'pizza near arabellapark','ophthalmologist munich'}

Suddenly, because of the UID attached to each tuple, we have the full history of that person’s search queries! On top of that, it is likely that one of the queries contains personally identifiable information (PII) which would allow identifying user . That was never the intention of whoever collected the data. But now the data exists, and the user can only take it on faith that their search history will not be misused by the company that collected it.

This is what happens when you collect data that can be aggregated by UID on the server-side. It can be used to build sessions - having a single query that leaks PII is enough to compromise the data for the life span of that session both in the past and future.

How does Cliqz solve this use case?

To solve the above problem statement, what we really need is a way to store queries per user for a given time frame. In the traditional approach, that happens to be the server, but the same can be done by leveraging the storage of the client – in this case, the browser.

With this approach, the job that aggregates on <UID, Timestamp> on the server-side can be shipped to the browser and run fully client-side. If the required conditions to detect bad-queries are met, clients will only send the information needed to solve the intended use case:

...

type=bad_query, query=pizza near arabellapark, target=google

...

This is exactly what we were looking for: cases of bad queries. Nothing more, nothing less. The snippet above satisfies the bad-queries task and will most likely not be reusable to solve other use cases. But it now comes without any privacy implications or side-effects.

Client-Side Aggregation

The aggregation of users’ data can always be done on the client-side, i.e. on the users’ devices and therefore under their full control. That is the place to do it. As a matter of fact, this is the only place where it should be allowed.

We call the approach of leveraging browser storage whenever aggregation needs to be done at a user level client-side aggregation.

This approach has some drawbacks, however:

- It requires a change of mindset by the developers.

- Processing and mining data implies that code be deployed and run on the client-side.

- The data collected might not be suitable to satisfy other use cases. Because data collected has been aggregated by users, it might not be reusable.

- Aggregating past data might not be possible as the data to be aggregated may no longer be available on the client.

However, these drawbacks are a very small price to pay in return for the peace of mind of knowing that the data being collected cannot be transformed into sessions with uncontrollable privacy side-effects.

Such an approach satisfies a wide-range of use cases. As a matter of fact, we have yet to find a use case that cannot be satisfied by client-side aggregation alone.

While removing the need to add explicit UIDs to be able to link messages on the server is a good first step, it does not suffice. Even the above mentioned approach, if implemented as-is, has some shortcomings which we will discuss in the following sections.

Network UIDs

Data needs to be transported from the user’s device to the data collection servers. This communication, if direct, can be used to establish record-linkage via network level information such as the IP and other network level data, doubling as UIDs.

We will cover this topic in a blog post tomorrow - Human Web Proxy Network (HPN), which not only talks about removing network level information but also how we built an anonymous rate limiting system.

Like any data collection system, Cliqz systems are also susceptible to attack vectors like spam/injection of fabricated data. The data sent to Cliqz servers does not contain any sort of UID, therefore the traditional approach to fraud-detection and rate-limiting based on the source of the message and historical activity of that source cannot be used. Human Web Proxy Network(HPN) is a system that protects Cliqz data streams from such attacks without tracking the users.

Implicit UIDs

We have seen that we got rid of the need for explicit UIDs by using client-side aggregation and communication via the Human Web Proxy Network to prevent network fingerprinting. However, there is still another big group of user identifiers: the implicit UIDs.

Content Independent Implicit UIDs

Even in the case of anonymous communication, the way and time in which the data arrives can still be used to achieve a certain level of record linkage - a weak one, but a session nevertheless. One such example is

Messages sent as batches:

If the client sends the message as a batch request, the server can still infer that it is coming from the same machine / user.

[type=bad_query,query=safequery1, target=google, timestamp=20191110,

type=bad_query,query=safequery2, target=google, timestamp=20191110,

type=bad_query,query=safequery3, target=google, timestamp=20191110]

To avoid this, each message is sent as a new request.

Timing based correlations:

Even if you send messages one by one, based on the time at which they arrive to the backend, we can still correlate that the messages are coming from the same machine. Even though the correlation is weak, it is nevertheless possible.

Messages are sent at random intervals to avoid such correlations.

Incorrect clock settings:

All timestamps sent inside the payload are also capped to hour or day level depending on the use case. Even though the timestamps are capped, if the system clock is incorrect it can be used as a fingerprinting vector. We avoid this by fetching “current day” from the backend at regular intervals and storing it locally. Moreover, any data sent with incorrect date will be rejected by the backend.

HTTP Headers:

Combinations of multiple values could also enable the collector to create temporary UIDs. Example: User-agent, Accept-Language, Origin headers etc.

As an example, when Firefox moved from the Bootstrap to WebExtension extensions architecture, they started sending the extension ID in the Origin header. Given that the extension ID is unique for each user, it is a very strong identifier. So even if your data collection systems are free of identifiers, platforms might introduce some side channel leaks.[3].

To avoid such occurrences, we ensure that we strip out the HTTP headers[4] that are not directly needed from the client.

Content Dependent Implicit UIDs

The content dependent implicit UIDs are, as the name suggests, specific to the content of the message, and are thus application-dependent. For that reason, it is not possible to offer a general solution since it varies from message to message–or in other words, it varies from use case to use case.

In the bad-queries example, for instance, the content of the message (the query) is concerning. Before sending bad-queries messages back, we run the content of the query through a set of heuristics CliqzHumanWeb.isSuspiciousQuery[5] to determine whether it is safe or not. If any of the above checks fails, the message will not be sent.

Even after all these checks, the query itself could contain self-incriminating information and fall through the cracks of heuristics. But even in that case, we still would not be able to associate all the queries from the same user.

Server-side aggregation is not safe from this either; having one query that leaks PII will compromise data for the life span of that session both in the past and in the future.

Given that the content of messages can include URLs, queries or content of the page visited by the users, we would like to provide some additional examples of good practices and elaborate on how we make sure that implicit UIDs, or other private information, never reach Cliqz servers for some of our more complex messages.

Examples of Human Web Messages

We will cover three different types of messages of user data collected by Cliqz, putting special emphasis on how we prevent content dependent implicit UIDs.

In this section, we will refer to the code deployed to our users in October 2019, or version 2.7. The snapshot of the code is available here.

The latest version of the Human Web is always available in our open-source repository. Cliqz has a policy to open-source any release delivered to our users.

1. Telemetry Message

The first type of message generated by the user is hw.telemetry.actionstats,

{

"ver": "2.7",

"ts": "20191128",

"anti-duplicates": 2399856,

"action": "hw.telemetry.actionstats",

"type": "humanweb",

"payload": {

"data": {

"droppedLocalCheck": 3,

"tRcvd": 16,

"unknownerror-page": 1,

"unknownerror-attrack.safekey": 1,

"unknownerror-attrack.tokens": 1,

"hw.telemetry.actionstats": 1,

"attrack.safekey": 2,

"tSent": 10,

"itemsLocalValidation": 6,

"alive": 3,

"exactduplicate": 3,

"anon-ads_A": 1,

"anon-query": 1,

"total": 62,

"anon-ads_D": 1,

"page": 4,

"attrack.tokens": 5,

"mp": 9

}

}

}

This message is sent by the user once per day, with the aggregated counters of different message types sent. The content of the message can be found in the field payload.data.

The content itself is not privacy sensitive at all. Even though the signature of the counters could be unique, there is no guarantee that it will be maintained over time, and even if so, linking two records of the type hw.telemetry.actionstats would be harmless.

Note that common sense is important. Record-linkage is to be avoided when dangerous or unknown. For instance, in the message above we combined different telemetry attributes into a single message, but they could also be sent in different messages, with a loss of ability to perform correlations between telemetry attributes.

The person responsible for the data collection must consider the safety of combining multiple data points in a single message and evaluate the risk. If there is a risk and/or the repercussions of record-linkage are dangerous, the most prudent approach is to play it safe and keep the messages as small (i.e. low entropy) as possible. The content of the message is totally application specific, the application being telemetry in this case.

The following structure:

{

"ver": "2.7",

"ts": "20191128",

"anti-duplicates": 2399856,

"action": "hw.telemetry.actionstats",

"type": "humanweb",

"payload": ...

}

…is common to all Human Web Messages. It contains the version number, the timestamp capped to a day (to avoid temporal correlations of data) and the type of message in the field action. The field anti-duplicates is a random number, unique for each message (as a failsafe for message duplication; this was rendered obsolete by the temporal uniqueness validation from HPN and will be removed in following versions of Human Web).

2. Query Message

Let us take a look at a more interesting message type–like a message of the type query:

{

"ver": "2.7",

"ts": "20191128",

"anti-duplicates": 9564913,

"action": "query",

"type": "humanweb",

"payload": {

"q": "ostern",

"qurl": "https://www.google.de/ (PROTECTED)",

"ctry": "de",

"r": {

"1": {

"u": "http://www.kalender-365.eu/feiertage/ostern.html",

"t": "Ostern 2017, Ostern 2018 und weiter - Kalender 2019"

},

"0": {

"u": "http://www.kalender-365.eu/feiertage/2017.html",

"t": "Feiertage 2017 - Kalender 2019"

},

"3": {

"u": "http://www.schulferien.org/Feiertage/Ostern/Ostern.html",

"t": "Ostern 2019, 2017, 2018 - Schulferien.org"

},

"2": {

"u": "http://www.schulferien.org/Schulferien_nach_Jahren/2017/schulferien_2017.html",

"t": "Schulferien 2017 - Schulferien.org"

},

"5": {

"u": "http://www.feiertage.net/uebersicht.php?year=2017",

"t": "2017 - Aktuelle Feiertage 2013, 2014 bis 2037 - NRW, Bayern, Baden ..."

},

"4": {

"u": "http://www.wann-ist.info/Termine/wann-ist-ostern.html",

"t": "Wann ist Ostern 2017"

},

"7": {

"u": "http://www.kleiner-kalender.de/event/ostern/03c.html",

"t": "Ostern 2017 - 16.04.2017 - Kleiner Kalender"

},

"6": {

"u": "http://www.ostern-2015.de/",

"t": "Ostern 2017 - Termin und Datum"

},

"9": {

"u": "http://www.aktuelle-kalenderwoche.com/feiertage-brueckentage-2017.html",

"t": "Feiertage und Brückentage 2017 - Die aktuelle Kalenderwoche"

},

"8": {

"u": "http://www.feiertage.info/2017/ostern_2017.html",

"t": "Ostern 2017 - Feiertage Deutschland 2015, 2019 und weitere Jahre"

}

}

}

}

This message type is generated every time a user visits a Search Engine Result Page (SERP). Each URL in the address bar is evaluated against a set of regular, dynamically loaded patterns, which determine if the user is on the SERP of either Cliqz, Google, Bing, Yahoo or Linkedin.

Please allow us to emphasize that the message above is real, and that the data above is the only information we will receive. This message arrives to Cliqz data collection servers through the HPN network anonymization layer.

The message does not contain anything that could be related to an individual person, and it does not contain any sort of UID that could be used to build a session at the Cliqz backend.

The only thing that we, as Cliqz, could learn is that someone who is a Cliqz user queried for ostern on Google on the day 20191128 and the results that Google yielded. Sending any sort of explicit UID in that message, or not removing implicit UIDs, could lead to a session for the user that would contain all the queries, or at least a fraction of them. If any of the queries in the session contains personal identifiable information (PII), then the whole session could be de-anonymized. Even though using UIDs is the industry standard, we are not willing to take such a risk. We need the data–it is used to train our ranking algorithms, so it is crucial for us. But we only want the query, nothing else. Having the same message with a UID would be convenient, as the data could be re-purposed for other use cases, but the risks to the users’ privacy are simply not acceptable.

The message of type query is trickier than the previous hw.telemetry.actionstats message. It contains URLs and a fragment of a user input, so we must ensure that no implicit UID is present in this data that is introduced by external actors, in this case Google and the user.

Let us go over how the message is built:

Minimizing information sent back

The first and most important rule: send only what you need, not more.

A typical SERP URL (can be found in your browser’s address bar) looks like this,

https://www.google.de/search?q=ostern&

ie=utf-8&oe=utf-8&client=firefox-b-ab&gfe_rd=cr&ei=2z44WO2pNdGo8weJooGADQ

One would be tempted to send this URL as is, and then extract the query on the server side. But that is dangerous as we cannot be certain that no UID is embedded in the query string.

What is the purpose of &ie=utf-8&oe=utf-8&client=firefox-b-ab&gfe_rd=cr&ei=2z44WO2pNdGo8weJooGADQ? Is any of that data specific to the user so that it could be used as a UID?

It is always safer to sanitize any URL, instead of the raw SERP URL we would send this:

{

"q": "ostern",

"qurl": "https://www.google.de/ (PROTECTED)"

}

The URL has been sanitized through (CliqzHumanWeb.maskURL). The query q itself is also subjected to some sanitization, always required when dealing with user input. In the case of a query, we apply some heuristics to evaluate the risk of the query (CliqzHumanWeb.isSuspiciousQuery). If the query is suspicious the full message will be discarded and nothing will be sent. The query heuristics cover things like,

- query too long (>50 characters)

- too many tokens (>7)

- contains a number longer than 7 digits, fuzzy, e.g. (090)90-2, 5555 3235

- contains and email, fuzzy

- contains a URL with HTTP username or password

- contains a string longer than 12 characters that is classified as Hash (Markov Chain classifier defined at

CliqzHumanWeb.probHashLogM)

Does the CliqzHumanWeb.isSuspiciousQuery guarantee that no personal information will ever be received? No. And that is also true for other heuristics that will be introduced later. That said, one must consider that even in the case that a PII would escape sanitization, either because of a bug or because of lack of coverage, the only thing compromised would be the PII and the record itself. Because sessions in Human Web are not allowed, the damage of a PII failure is contained.

Never send data rendered to the user

The last part of the message is the field r which contains the results returned by Google. One could think that the data is safe, but that might not be always the case.

We cannot rule out the possibility that the user was logged in and that the content of the page (in the case of Google’s SERP) was not customized or personalized. If that was the case, the content could contain elements that could be used as PIIs or UIDs.

It is very dangerous to send any content extracted from a page that is rendered to the user. The only information that can be sent is information that is public, period.

To deal with this problem we rely on what we called doubleFetch. Which is an out of band HTTP GET request to the same URL (or a canonized version of the URL) without session. Clients perform this request by leveraging the fetch API[6].

By doing so, the content is not user-specific as the site has no idea who is issuing the request. The anonymous request does not allow cookies or any other network session. If the site requires authentication, the response of the site will be a redirect to a login page. (There are some caveats to this that will be covered in the next section where we dig further on doubleFetch).

The results in field r are the ones scrapped from the content of the double-fetch rather than from the original content presented to the user.

3. Page Message

In order to have relevant data for search, we need the following:

This is made possible using a message sent by Human Web called page:

{

"ver": "2.7",

"ts": "20191128",

"anti-duplicates": 8441956,

"action": "page",

"type": "humanweb",

"payload": {

"a": 252,

"qr": {

"q": "2 zone gasgrill",

"t": "go",

"d": 1

},

"e": {

"mm": 35,

"sc": 16,

"kp": 0,

"cp": 0,

"md": 5

},

"url": "http://grill-profi-shop.de/",

"st": "200",

"x": {

"ni": 2,

"pagel": "de",

"nl": 56,

"lt": 4472,

"lh": 27647,

"ctry": "de",

"nf": 2,

"iall": true,

"ninh": 2,

"nip": 0,

"canonical_url": "http://grill-profi-shop.de/",

"t": "\nEnders Gasgrill & Grill online kaufen\n"

},

"dur": 160074,

"red": null,

"ref": null

}

}

We use this message to learn that someone has visited the URL http://grill-profi-shop.de/. We also want to learn how users interact with the pages as a proxy to infer the page quality. The page messages are heavily aggregated, for instance,

- field

payload.atells us the amount of time the user has engaged with the page, - field

paylaod.e.mmtells us the number of mouse movements, - field

payload.e.sctells us the number of scrolling events,

The aggregated information about the page is very useful to us. Human Web enables what is a collaborative effort of Cliqz users, and to gather data on how users interact with the page help us figure out its quality and relevance.

All this information is aggregated as the user interacts with the page, once the user closes the page or the page becomes inactive for more than 20 minutes, aggregation stops and the process to decide whether or not the message can be sent starts - the proper page lifecycle is better described in the section below.

Besides engagement, the page message above also contains the field payload.qr, which is only present when the page was visited after a query on a search engine,

"qr": {

"q": "2 zone gasgrill",

"t": "cl",

"d": 1

}

In this case, the page was loaded after a request to Cliqz for the query 2 zone gasgrill. The field t stands for the search engine and d is the recursive depth. qr is only sent if depth is 1, otherwise it could be used to build sessions, we will discuss how payload.qrcan affect record linkage in a follow-up section.

At this point it should be evident why we are interested in the data contained in page messages.

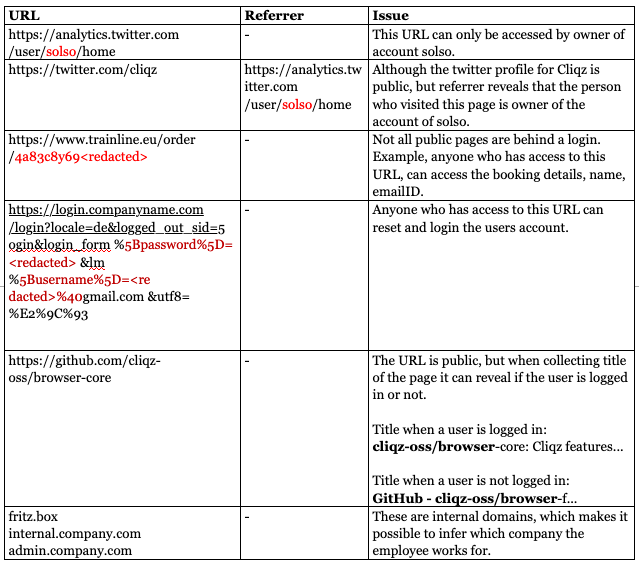

Collecting URLs is a risky proposition, we do not want to collect URLs which are not meant to be public. URLs can contain a lot of private information or enough information that they can lead you to pages which contain private information.

Therefore, before sending any URL back, there are a series of rigorous checks that are performed to determine whether a URL is safe or not and can be sent back. Failing any of the checks, the page is marked as private and Human Web will not send any information about it.

A piece of practical advice - filter everything that you do not know is safe, since determining safeness is far from trivial, it is more sensible to revert the argument: collect only what you know is safe at the time of the design.

Human Web is built on the idea of “Better safe than sorry”, which means, the system might detect some public pages as private and it is fine to drop them, rather than the other way around.

Page Lifecycle

Let us now describe the page lifecycle in more detail.

Human Web does not collect data in private mode, so the details below are only applicable to web pages in normal mode.

We detect a user visiting a web page by monitoring the onLocationChange change event. The following approach is then taken to filter out sensitive pages. If any check fails, processing of that URL is stopped, the page is marked as private and no data is sent back.

Suspicious URL:

We get the URL on the current tab and check if it is acceptable using CliqzHumanWeb.isSuspiciousURL, which checks for:

- Not on odd ports, only 80 and 443 are allowed.

- No HTTP auth URL.

- No domain as IP.

- Protocol must be

httporhttps. - No localhost.

- No hash

#in the URL unless it is a known SERP URL or the text after#is smaller than 10 characters.

If URL passes these first checks we continue, otherwise we stop the process and ignore that page.

The next step is to determine if the URL is a known SERP URL (CliqzHumanWeb.checkSearchURL). If it is, then a message type query is generated (described in the previous section). If it is not a SERP URL we proceed to generate a page type message.

The URL under analysis is kept in memory at CliqzHumanWeb.state['v']. Additional data is aggregated to the object in memory as the user interacts with the page. For instance, number of key presses, mouse movement, referral, whether the page comes out of a query (field payload.qr), etc. We can also aggregate information that will be discarded later on. For instance, we still aggregate the links that the user follows when on the page. In prior versions (below 2.3) we used to send such links as payload.c, provided they meet certain criteria such as same-origin on domain, no query string, etc. Since version 2.3 the whole field is still aggregated but removed before the message is sent at the sanitization step.

Another important piece of data that will be kept is the “page signature”, which is extracted from the content document after a timeout of 2 seconds. The signature looks like the field payload.x on the message above, however, the first signature will not be sent since it is generated from a page rendered to the user. The first page signature is a required input for the doubleFetch process mentioned below.

The page will stay in memory until,

- the page is inactive for more than 20 minutes,

- the user closes the tab containing the page,

- the tab loads another page or

- the user closes the browser (or window).

When the page is unloaded from memory it will be persisted to disk (CliqzHumanWeb.addURLtoDB) and it will be considered as a pending page.

Up to this point, nothing has been sent yet. The information is either in memory or in localStorage.

Every minute, an out-of-band process on the main thread (CliqzHumanWeb.pacemaker) will check for URLs that are no longer active and try to finalize the analysis.

The selection of the URLs to be processed is determined by (CliqzHumanWeb.processUnchecks), a queue that picks pending pages from storage. The number of URLs on the queue depends on the browsing activity of the user; the incoming rate is pages visited (URL on address bar, no 3rd parties or frames), and the outgoing rate is 1 per minute while the browser is opened.

We will evaluate CliqzUtils.isPrivate on the analyzed URL, which returns if the page has been seen before and flagged as private or whether it is unknown. If the URL was already marked as private in the past the process stops and no message is generated.

There are multiple ways a page can be classified as private:

- Because

doubleFetchprocess fails, either it cannot be completed or the signature of the pages after double fetch does not match (more on that later). - Because the URL comes out of a referrer that was private.

- Because the referral chain is too long (>10), typically suspicious pages with odd behaviors that we want to ignore.

If the page is not private we will continue for the doubleFetch to assess whether it is public or not.

Double Fetch

As previously mentioned, a doubleFetch consists in the URL being fetched using an anonymous HTTP(s) request using the client-side fetch API.

The content will be anonymously fetched, parsed and rendered on a hidden window and finally we will obtain the signature of the page:

"x": {

"ni": 2,

"pagel": "de",

"nl": 56,

"lt": 4472,

"lh": 27647,

"ctry": "de",

"nf": 2,

"iall": true,

"ninh": 2,

"nip": 0,

"canonical_url": "http://grill-profi-shop.de/",

"t": "\nEnders Gasgrill & Grill online kaufen\n"

}

The signature of the page contains the canonical URL (if it exists); the title of the page); plus some structural information of the page such as number of input fields (ni), number of input fields of type password (nip), number of forms (np), number of iframes (nif), length of text without html (lt), etc.

At this point we have two page signatures for the URL: a) with the content rendered with the session of the user, x_before. And, b) with the content rendered without the session of the user, x_after, i.e. the content rendered as if some random user had visited the same page.

If the signatures do not match it means that the content of the page is user-specific and that the page should be treated as private. It will be flagged as private and ignored forever. Of course, no message will be sent.

Matching the signatures is defined at CliqzHumanWeb.validDoubleFetch. Note that the match is fuzzy since signatures can differ a bit even in case of public pages. Furthermore, there are many pages that have co-existing private and public versions, e.g. https://github.com/solso, https://twitter.com/solso will have different x_before and x_after signatures depending on if the user solso was logged in or not. However, in both cases the public version of the page is indeed public. Long story short, the function validDoubleFetch controls whether the URL should be considered public or private depending on how the signatures of the pages depart.

Any page that requires a login will fail on the double fetch validation for multiple different reasons: the URL requested anonymously will be unreachable due to redirect towards a login or error page; titles will not match; the structure of the page (e.g. number of passwords or forms) will be too different and so on. If validation fails the page will be flagged as private and never processed again. Furthermore, any URL whose referral is marked as private will also be considered as such.

There is one particular case in which the double fetch method is not effective: when the authorization is based on the fact that the user is on a private network. This setup is often encountered at home and in office environments; access to routers, company wikis, pages whose authorization relies on access through a VPN. In such cases the anonymous requests of double fetch has no effect, because the double fetch is done on the same network. To detect such cases we rely on the IP returned in response headers[7]

Capability URLS

Another aspect to consider is capability URLs, some of which are not protected by any authorization process and simply rely on obfuscation. Google Docs, Github gists, dropbox links, “thank you pages” on e-commerce sites, etc. Some of these capability URLs are meant to be shared but others are not.

Some providers like dropbox.com or github.com are careful enough to mark pages that are meant to be private as noindex pages,

<meta content="noindex">

We flag a page as private if it has been declared not indexable by the site owner. However, not all site owners are so careful. Google Docs for instance does not, and they can contain a fair amount of privacy sensitive and/or PII data.

To detect such URLs we rely on CliqzHumanWeb.dropLongURL that heuristically determines if the URL looks as a potentially capability URL (the name dropLongURL is somewhat legacy of the first version of Human Web where length of the URL was the only heuristic rule). Nowadays there are many more rules to increase coverage, for instance:

- query string (or post # data) is too long (>30),

- segments of the path or the query string are too long (>18),

- segments of the path or the query string as classified as Hashes (Markov Chain classifier defined at

CliqzHumanWeb.probHashLogM), - the URL contains a long number on path or query string,

- the URL contains an email on path or query string

- query string or path contain certain keywords like: admin, share, Weblogic, token, logout, edit, uid, email, pwd, password, ref, track, share, login, session, etc.

The heuristics on CliqzHumanWeb.dropLongURL are quite conservative, meaning that a lot of pages get incorrectly classified as suspicious to be capability URLs and consequently flagged as private. False positives, however, are not such a big deal for our use case.

However, there is no guarantee that a false negative does not slip through the cracks of the heuristics. We routinely check for URLs that reach us and from time to time, not often, we are still able to find URLs that we would rather not receive, we are talking about single digit figures in millions of records.

It is worth noticing that both CliqzHumanWeb.validDoubleFetch and CliqzHumanWeb.dropLongURL have a variable strictness level, controlled by CliqzHumanWeb.calculateStrictness. Both functions are a bit less restrictive if the page has a canonical URL and that referral page contains the URL as a public link in the content loaded on an anonymous request.

Quorum Validation Check

To reduce the probability of collecting capability URLs we have devised a quorum based approach by which a page message will only be sent if more than k people has already seen that URL. This approach if loosely based on k-anonymity, (see CliqzHumanWeb.sendQuorumIncrement and CliqzHumanWeb.safeQuorumCheck for the implementation details).

The browser will send to an external Quorum Service hosted by Cliqz (accessible through the HPN anonymization layer):

INCR & SHA1(u) & ocif it’s the first time that the user visits the URLuin a 30 days period, or,SHA1(u) & ocif the user has visited the URLuin the last 30 days

The field INCR will prevent that repeated visits from the same browser, same user, to increment the quorum counter more than once. Capability URLs are often shared in a certain context; when this context is the workplace or home network it is possible that all the computers have the same public IPv4 address (or a limited range of public IP addresses). That is why the last octet of the IPv4 address oc is also included in the request, so that visits from users in the same networks are only counted once.

The quorum service will keep a tally of how many times someone has attempted to increment (INCR) while having a different last octet on their public IPv4 address to exclude people on the same local network.

Once the quorum threshold is reached (currently set to 5) the server will respond true. Note that the threshold is a lower bound given that the oc can have collisions.

Only when the quorum service responds true will the message proceed down the pipeline. We only apply quorum to URLs of message type page that do not have a qr field and have a path longer than / or that have query strings; for example, https://github.com/ would not be subjected to quorum whereas https://github.com/solso would be.

Preparing the Message

The URL is declared not-private when:

- double fetch validation passes,

- the URL is standard enough so that it does not look like a capability URL,

- The quorum checks also pass.

At this point we are almost done with the analysis, but there are some additional steps.

We perform another round of double-fetch called triple-fetch on a forcefully cleaned-up version of the URL in which we remove either the query string (if it exists) or the last segment of the URL path.

For instance, a URL in the address bar could look like this:

...

http://high-tech-gruenderfonds.de/en/cognex-acquires-3d-vision-company-enshape/

?utm_source=CleverReach&utm_medium=email&

utm_campaign=COGNEX+ACQUIRES+3D+VISION+COMPANY+EnShape&utm_content=Mailing_10747492

...

The query string might contain some redundant data that could be used as implicit UID, or perhaps the data in the query string is needed. There is no easy way to tell, but we can test it.

If we remove the query string:

...

utm_source=CleverReach&utm_medium=email

&utm_campaign=COGNEX+ACQUIRES+3D+VISION+COMPANY+EnShape&utm_content=Mailing_10747492

...

The page signature of the page does not change, so it is safer to send the cleaned-up version of the URL,

http://high-tech-gruenderfonds.de/en/cognex-acquires-3d-vision-company-enshape/

Incidentally, is also the canonical URL,

<link rel="canonical" href="http://high-tech-gruenderfonds.de/en/cognex-acquires-3d-vision-company-enshape/" />

We always prefer to send the minimal version of the URL to minimize the risk of sending data that could be exploited as implicit UIDs.

We would like to emphasize that the signature of the page is not the signature from the original render to the user but the signature of the content of the double or triple fetch.

In this post, triple fetch is introduced after the quorum, but in reality the check happens before.

Ready to Go

At this point the message type page is ready to be sent. We apply one last check to sanitize the message: CliqzHumanWeb.msgSanitize, which takes care of the last steps. It will:

- remove the referral if it does not pass

CliqzHumanWeb.isSuspiciousURLand mask it usingCliqzHumanWeb.maskURL; otherwise, it will - remove any continuation (

payload.c) since we do not really want to send this information, - check the title with

CliqzHumanWeb.isSuspiciousTitle; if it contains long numbers, email addresses and so forth, the whole message will be dropped, - make sure the

payload.urlhas been set to the cleanest URL available (the original, canonical, the forcefully cleaned URL on the triple-fetch) - etc.

Most of the checks on msgSanitize are redundant as they are already taken care of in other parts of the code; still, having one last centralized place to perform sanity checks is highly recommendable.

Parallel History

The lifecycle of the message type involves persistent storage, so we must be careful not to create a parallel history of the user’s browsing.

Extension’s localStorage will store records with the visited URL–as long as it was not already private–plus the signature of the page. The record is created when the user visits the web page and removed once the record has been processed by doubleFetch, on average after 20 minutes. If double fetch were to fail due to network reasons it will be retried three times, if all three fail it will be considered as private and removed to avoid having the URL of the web page orphan in the storage.

URLs flagged as private need to be kept forever, otherwise we would do unnecessary double fetch processing. To maintain the privacy of private URL we do not store them as plain text; instead, we store the truncated MD5 on a bloom filter:

const hash = (md5(url)).slice(0,16);

CliqzHumanWeb.bloomFilter.testSingle(hash);

The bloom filter provides plausible deniability to anyone who wants to prove that the user visited a certain URL.

The same technique of bloom filters is used on the Quorum validation check to know if the URL has been visited in the last 30 days. In this case, an array of bloom filters (CliqzHumanWeb.quorumBloomFilters) is needed–one filter per natural day so that we can discount time periods exceeding 30 days.

Controlled Record Linkage

Messages of type page are susceptible to very limited record linkage due to the fields: qr and ref.

For instance, the field ref can be used to probabilistically link two or more messages of type page but those sessions are bound to be small since ref is forced to pass CliqzHumanWeb.isSuspiciousURL, and if not suspicious, it will also be masked by CliqzHumanWeb.maskURL. So, in practice, referrals are kept at a very general level. For instance,

CliqzHumanWeb.maskURL('http://high-tech-gruenderfonds.de/en/cognex-acquires-3d-vision-company-enshape/?utm_source=CleverReach&utm_medium=email&utm_campaign=COGNEX+ACQUIRES+3D+VISION+COMPANY+EnShape&utm_content=Mailing_10747492')

would yield,

"http://high-tech-gruenderfonds.de/ (PROTECTED)"

Which will be the URL finally used as ref. That still allows for some probabilistic record linkage, but the resulting session would be extremely small.

A similar argument goes for the field qr. The query in qr.q can be used to link the message of type query to the page type message that should follow. This type of two records sessions is in fact harmless since they do not provide additional information that was not already contained in one of the messages.

Why not use approach like differential privacy?

Differential privacy and Human Web are trying to solve the problem of privacy and data from two different perspectives:

Differentially-private data analysis is a principled approach that enables organizations to learn from the majority of their data while simultaneously ensuring that those results do not allow any individual’s data to be distinguished or re-identified. - https://developers.googleblog.com/2019/09/enabling-developers-and-organizations.html

The goal of Human Web is not so much to anonymize datasets that contain sensitive information, for that purpose approaches like Differential privacy, l-diversity, etc. are already there. The Human Web methodology aims to prevent those datasets to be collected in the first place.

There could be other ways to achieve this and we welcome any methodology applied to protect the data. There is one caveat though, the privacy protections have to be applied before sending the data, any solution that sends data and then anonymizes it is not good in our books; simply because there is no guarantee that the raw data is removed.

For our use cases, we believe Human Web is an easier way to get the data we need while respecting the privacy of the user.

Transparency and Controls

- Even though the data collected does not contain any user identifier or PII, users still have an option to opt-out.

- Our source code is open source, in case you want to verify the claims we have made.[8]

- The Cliqz browser comes with an in-built transparency monitor[9] to show the users the data being sent to Cliqz servers. It’s still not complete and needs improvements, but we want to empower users via such features in the future.

- You can also check the network traffic to verify the data being sent out, we send all messages through a 3rd party proxy provider (FoxyProxy) to strip the sender’s IP. We do an additional round of encryption, otherwise the proxy operators could see and modify your data. (More details on this in tomorrow’s post). This means in your network monitoring tool you will see encrypted payloads, therefore you need a few more steps to be able debug the traffic.[10]

Recap

Thanks for reading along until now. Here’s a brief summary of the tenets on which Human Web is built:

- Prevent record linkage – Drop any data point on the client-side that would enable us to build sessions on the backend.

- Minimize what is sent back home – Adding too much data on single message increases risk of implicit identifier.

- Minimize data sent as plain text – The content of the message itself could be dangerous. Therefore, adopt safer representations where actual content is not needed.

- Clean the house – Minimize data stored as plain text on the client side.

- Client-side aggregation at Cliqz is done at the browser level. However, it is perfectly possible to do the same using only standard javascript and HTML5, check out a prototype of a Google Analytics look-alike (part of the Data Collection without Privacy Side-Effects short paper).

Final Words

We do firmly believe that this methodology is a major step forward from the typical server-side aggregation used by the industry. With our unique approach, we mitigate the risk of gathering information that we would rather not have.

The risks for privacy leaks are close to zero, although there is no formal proof of privacy. We would never be able to know things like the list of queries a particular person has done in the last year. Not because our policy on security and privacy prevents us from doing so, but because it cannot be done. The way we collect data makes it technically infeasible for us, even if we were asked to.

Even if you are a website or an analytics company, you can still apply the Human Web approach of collecting data without record linkability and yet be able to solve complex business intelligence tasks. You can read more about this in our paper Data Collection without Privacy Side-Effects.

In our opinion, the Human Web is a Copernican shift in the way data is collected. We always welcome constructive feedback and bug reports via email to privacy [at] cliqz [dot] com.

Footnotes

In fact, most of the URLs are dropped thanks to these strict checks. ↩︎

For instance, Google Analytics data can be used to build sessions that can in turn potentially enable de-anonymization of a user by anyone who has access to said data. We showed in Section 2 of our paper Data Collection without Privacy Side-Effects that side-effects are unavoidable. ↩︎

To open the Transparency Monitor, select the respective option under Search settings in the Cliqz Control Center (Q icon next to the URL bar). ↩︎

Use the Cliqz extension in Firefox or build the extension from source.

- Open

about:debuggingand keep this tab open. - Select

This Firefox. - Make sure that the “Enable add-on debugging” box is checked.

- Go to Inspect -> Debug to open the developer tools.

- In the console tab, execute the following commands:

CLIQZ.app.modules.hpnv2.background.manager.log = console.log. - All messages will then be logged and you can inspect them.

- In addition, you can turn off the encryption of the payload with this command:

CLIQZ.app.prefs.set('hpnv2.plaintext', true). - We strictly recommend to only use this setting for debugging purposes.

- Open